One elegant way to replace your .NET interoperability code is to use Azure Functions. Sounds good in theory, but what does it take in practice? And what are Azure Functions, anyway?

Without going into too much detail, let me just say that Azure Functions are a way of running simple pieces of code as a service that you can invoke like any other RESTful web service. And of course, they run in Azure. To learn more about them, follow this link: https://docs.microsoft.com/en-us/azure/azure-functions/functions-overview

Creating them is as simple as invoking them, so let’s get started with an extremely simple demo that illustrates how amazingly powerful they are, and why they are a perfect solution for replacing your .NET code with something better.

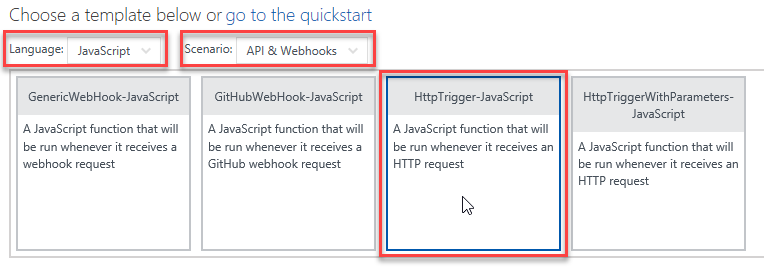

First, let’s create a new Azure Function. JavaScript junkie that I am, I’ll of course make it a JavaScript function. I’ll start from here:

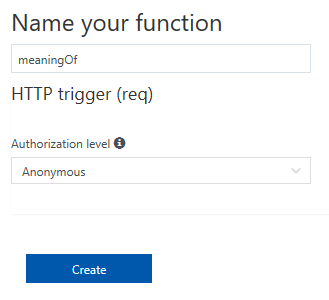

Then, I’ll create a function with anonymous access, just for the sake of simplicity:

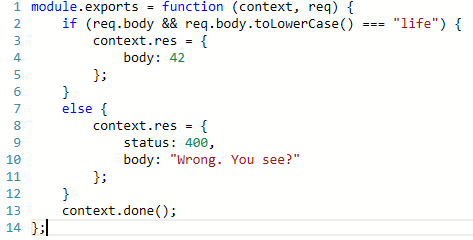

And then, I’ll put some code in my “meaningOf” function:
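A minimal sketch of what such a function could look like, assuming the classic Node.js programming model for Azure Functions (the host calls an exported function with a `context` object and the HTTP request, and the response goes into `context.res`). The answer logic, the `q` query-string fallback, and the split into a separate `meaningOf` helper are illustrative assumptions, not the original code:

```javascript
// Hypothetical "meaningOf" HTTP-triggered function (classic Node.js programming model).
// The answer table is purely illustrative.

// Pure logic, kept separate so it can be tested without the Functions host.
function meaningOf(question) {
  const topic = String(question ?? "").trim().toLowerCase();
  if (topic === "life") {
    return "42";
  }
  return `I have no idea what the meaning of "${topic}" is.`;
}

// Entry point: the Functions host calls this with a context object and the HTTP request.
async function handler(context, req) {
  // The AL client posts plain text, so req.body is expected to be a string here;
  // fall back to a ?q= query parameter for quick tests from the browser.
  const question =
    typeof req.body === "string" && req.body.length > 0
      ? req.body
      : req.query && req.query.q;

  context.log(`meaningOf called with: ${question}`);

  // Whatever is assigned to context.res becomes the HTTP response.
  context.res = {
    status: 200,
    headers: { "Content-Type": "text/plain" },
    body: meaningOf(question),
  };
}

module.exports = handler;
```

Keeping the logic in a plain function means it can be unit-tested locally with a fake `context`, without deploying anything.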

As simple as that.

Then, I’ll copy the URL of the function by clicking Get function URL > Copy.

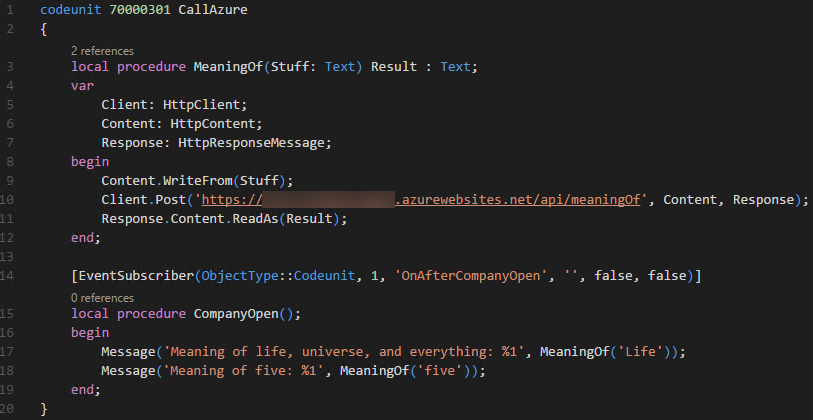

The next step is to create some AL code to consume this function. For that, I run AL: Go! from the Command Palette in Visual Studio Code and then replace the content of the generated HelloWorld.al file with this:

As you can see, calling RESTful services with the new HTTP API, which is now available at the AL language level, is amazingly simple:

- Declare an HttpContent variable. This will hold the body of your request.

- Declare an HttpClient variable. This will send the request to the Azure Function.

- Declare an HttpResponseMessage variable. This will receive the response from the service.
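To check the function outside AL, for example from a terminal before wiring up the extension, the same three steps (request body, client, response) can be sketched in JavaScript. This is a hedged equivalent, not the AL code itself: it assumes Node.js 18 or later for the built-in `fetch`, and the `askAzureFunction` name and the plain-text body are illustrative choices:

```javascript
// Hypothetical client mirroring the three AL steps (content -> client -> response).
// Requires Node.js 18+ for the global fetch; a fetch implementation can be injected for testing.
async function askAzureFunction(functionUrl, question, fetchImpl = globalThis.fetch) {
  // 1. The request body (the role of the AL HttpContent variable).
  const body = question;

  // 2. Post it to the function URL (the role of the HttpClient variable).
  const response = await fetchImpl(functionUrl, {
    method: "POST",
    headers: { "Content-Type": "text/plain" },
    body,
  });

  // 3. Check the status and read the result (the role of HttpResponseMessage).
  if (!response.ok) {
    throw new Error(`Function call failed with HTTP ${response.status}`);
  }
  return response.text();
}
```

With anonymous access, the URL copied via Get function URL can be used as-is; with function-level authorization, that URL already carries the key in its `code` query parameter.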

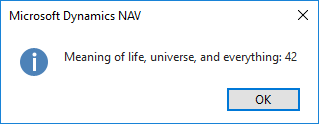

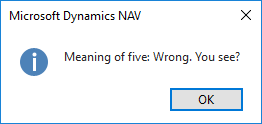

And then, with a few simple lines of AL code, you write the request body, post it to the Azure Function URL, receive the response, and read the result into a variable. As expected, this reveals life’s truth.

Now, invoking these functions is easy and they seem to be fast, too. However, how fast exactly are they?

There is a simple rule: your function must be deployed close to your NAV instance for it to be as fast as possible. If you run your NAV and host your Azure Function app in the same data center, you can expect decent performance. However, if you host your NAV in the US and your Azure Functions in Japan, your performance will be sluggish at best.

What exactly “decent performance” and “sluggish” mean can easily be quantified. I deployed my NAV in an Azure VM in West Europe, and then deployed three Azure Function apps: in West Europe, North Europe, and Australia South-East. Then, I invoked the function a hundred times against each of them and got these total times (averages of three runs):

- West Europe: 2.792 seconds

- North Europe: 13.045 seconds

- Australia South-East: 147.371 seconds
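How the calls are timed matters for figures like these. A minimal sketch of such a measurement, assuming sequential calls and `performance.now()` as the clock (Node.js 16+ exposes `performance` globally); `measureInvocations` and `averageOfRuns` are hypothetical helpers, and in NAV itself the loop would of course be written in AL:

```javascript
// Minimal latency harness: call an async function n times in sequence
// and report total and per-call time. The invoke callback and clock are injectable.
async function measureInvocations(invoke, n, now = () => performance.now()) {
  const start = now();
  for (let i = 0; i < n; i++) {
    // Sequential on purpose: each call waits for the previous one to finish.
    await invoke(i);
  }
  const totalMs = now() - start;
  return { totalMs, perCallMs: totalMs / n };
}

// Average the total over several runs (e.g. three runs of 100 calls each).
async function averageOfRuns(invoke, n, runs, now) {
  let sum = 0;
  for (let r = 0; r < runs; r++) {
    const { totalMs } = await measureInvocations(invoke, n, now);
    sum += totalMs;
  }
  const avgTotalMs = sum / runs;
  return { avgTotalMs, avgPerCallMs: avgTotalMs / n };
}
```

Sequential calls measure round-trip latency; firing the calls in parallel would measure throughput instead, which is a different number.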

Obviously, there is overhead related to HTTP communication. But depending on what exactly you do, 28 milliseconds per invocation (2.792 seconds divided by 100 calls) may not be such a big deal for something that doesn’t have to be invoked constantly. An occasional invocation here and there makes an imperceptible speed difference compared to a local, in-process .NET invocation. So, scenarios such as barcode generation, encryption of larger chunks of data, regex validation, bitmap manipulation, or anything else you do once per business process will all behave as fast as they used to. Actually, they won’t; they will be some 28 milliseconds slower, but users won’t notice that.

However, that’s 28 milliseconds when everything runs in the same datacenter. Once your function and your NAV instance are farther apart, latency kicks in and things are not so wonderful anymore: North Europe already costs about 130 milliseconds per call, and Australia South-East almost 1.5 seconds. This means that to get fantastic responsiveness, you’ll have to maintain copies of your functions in multiple datacenters, close to each of your NAV instances.
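Maintaining copies per datacenter also means each NAV instance has to know which copy to call. One way to sketch that, assuming one function app per region and HTTP-triggered functions on the default `https://<app>.azurewebsites.net/api/<function>` route, is a simple lookup with a fallback. The app names, the region keys, and the fallback choice are hypothetical; in NAV the same mapping could just as well live in a setup table:

```javascript
// Hypothetical map of function app deployments per Azure region.
// App names and URLs are placeholders, not real endpoints.
const FUNCTION_ENDPOINTS = {
  westeurope: "https://contoso-fn-weu.azurewebsites.net/api/meaningOf",
  northeurope: "https://contoso-fn-neu.azurewebsites.net/api/meaningOf",
  australiasoutheast: "https://contoso-fn-aus.azurewebsites.net/api/meaningOf",
};

// Pick the endpoint in the same region as the NAV instance; fall back to a default
// region if there is no copy of the function there (accepting the extra latency).
function endpointFor(navRegion, endpoints = FUNCTION_ENDPOINTS, fallback = "westeurope") {
  // Normalize "Australia South-East" -> "australiasoutheast".
  const key = String(navRegion).replace(/[\s-]+/g, "").toLowerCase();
  if (Object.prototype.hasOwnProperty.call(endpoints, key)) {
    return endpoints[key];
  }
  if (!Object.prototype.hasOwnProperty.call(endpoints, fallback)) {
    throw new Error(`No function endpoint for region "${navRegion}" and no fallback`);
  }
  return endpoints[fallback];
}
```

Falling back to a distant region keeps things working, but at the latencies measured above; adding a copy of the function in the missing region is what actually fixes the responsiveness.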

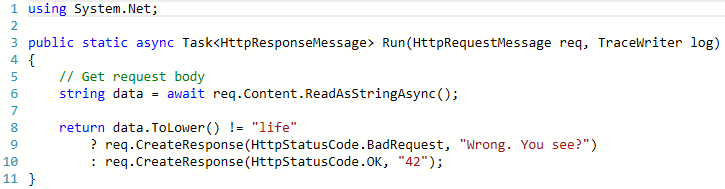

And now it’s time to debunk a myth. A lot of people think JavaScript is “interpreted”, and many more believe JavaScript does not perform as fast as “real languages”. So, now that I have an environment where JavaScript and “real languages” can go head to head, I created a C# copy of my function, just to see if I would see a significant performance improvement of C# over JavaScript.

Here’s the hero:

Guess what? I didn’t. Guess what else? I actually saw a performance penalty for C# compared to JavaScript. Honestly, I didn’t see this coming, but Node.js is apparently one hell of a powerful runtime environment. Want numbers? The C# function took 3.206 seconds for 100 invocations, compared to 2.792 seconds for JavaScript. In other words, C# took roughly 15% longer than JavaScript. Lovely. Now don’t JavaScript-is-not-a-programming-language me anymore.
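The 15% figure is easy to check against the West Europe JavaScript total, assuming both functions were measured under the same conditions. A small worked calculation (the helper names are illustrative) also shows why the same gap can be quoted as two different percentages, depending on which number is the baseline:

```javascript
// Worked comparison of two measured totals (seconds for 100 invocations each).

// How much longer the candidate took, as a percentage of the baseline.
function percentSlower(candidateSeconds, baselineSeconds) {
  return ((candidateSeconds - baselineSeconds) / baselineSeconds) * 100;
}

// How much less time the candidate took, as a percentage of the baseline.
function percentFaster(candidateSeconds, baselineSeconds) {
  return ((baselineSeconds - candidateSeconds) / baselineSeconds) * 100;
}

const javascriptTotal = 2.792; // JavaScript function, West Europe
const csharpTotal = 3.206; // C# copy of the function

console.log(`C# took ${percentSlower(csharpTotal, javascriptTotal).toFixed(1)}% longer`); // prints "C# took 14.8% longer"
console.log(`JavaScript took ${percentFaster(javascriptTotal, csharpTotal).toFixed(1)}% less time`); // prints "JavaScript took 12.9% less time"
```

Both statements describe the same 0.414-second difference; "roughly 15%" uses the JavaScript time as the baseline.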

Before I close, let me share a dream I had last night. I had a dream that AL makes invoking Azure Functions even simpler. You see, HttpTrigger is just one kind of trigger for running a function, and perhaps a way can be found to create a different kind of trigger, one that responds directly to an AL invocation from a D365 application through a more tightly integrated language feature than an HttpClient call. I’d love to be able to invoke Azure Functions by simply doing something like Result := CallAzureFunction('Name', parameters…)

A man can dream, right?

Words of wisdom for the end? Not much. Azure Functions are a simple yet powerful way to replace your .NET interoperability stuff with something very similar. They don’t perform as fast as in-process .NET does, but depending on what exactly you do, you won’t feel it one tiny bit. And with a little bit of imagination, you can do crazy things with them. Crazy things.

This is nice… All this about Node.js etc. is actually very powerful because of the internal model it uses. But…

I can see many drawbacks when comparing .NET interop with calling Azure Functions through their API. One of the most critical, as I see it, is that we lose the advantages of strong data type control. You can’t use a DLL in NAV as a predefined data structure anymore, and I love that possibility… I have worked with JavaScript for some time, and you really have to get used to it, especially on large projects with complex data structures. You don’t get native data type checking at build time, so many more problems arise at runtime, which is hell.

I was expecting that we would get support for generics in NAV, but now I can say “goodbye” to that feature.

I think you can live with Azure Functions as a replacement for .NET interop; actually, you can live with almost anything. But you need time to adapt and to build some foundation to be efficient in your daily tasks. The problem is that Azure Functions will be a temporary transition to something “much better” in the future, so…

I personally hope they will migrate NAV services to a Docker stack in the future and re-enable .NET functionality in NAV (I hope the security concerns won’t be such a hot topic).

Again, this is just my opinion.

You have valid points here, Jakub. This is definitely not a 1:1 replacement for .NET, but it’s still a powerful feature of Azure that’s easily consumable from NAV, and has a lot of potential for future development. I am really looking forward to seeing in which direction it goes.

By the way, I am not sure Docker would solve the .NET problem, because as long as there are more tenants than Docker containers, you have the same risks. Also, the number of possible combinations of assemblies loaded per container grows exponentially with the number of apps available to users, so unless we end up with a container per tenant (and I don’t really know how difficult that would be), we won’t see .NET in there…

Yes, I understand that we are not talking about a 1:1 replacement. And of course, there is potential.

From my point of view, you can call Azure Functions from “the old” NAV stack (I mean the current C/SIDE environment), but you cannot use .NET directly from the new one (VS Code with AL).

I would personally like to have one unified DEV experience (one IDE and one common language) across multiple deployment targets. I suppose MS could use some decorators and configuration, and then it would be up to you whether to deploy on-premises or on the Azure stack. Of course, when deploying on the Azure stack, it would not be possible to deploy solutions that use .NET interop. But if you have clients who want a flexible ERP and run it on-premises… why would you ban this possibility?

In this scenario, everyone could choose based on what kind of customers they have (customers with simple business logic and mostly financial needs vs. those with complex business logic, manufacturing, and even connections to machinery).

And in the case of Docker and .NET interoperability, I understand that the limitation is multitenancy. But I hope MS will go further, because I strongly believe you could achieve multitenancy with multiple containers. Keep in mind that the idea behind Docker isn’t actually anything super-new. It has quite a long history on Linux (LXC containers), but Docker moves it forward, and I can see the concept solving many problems we face nowadays. For example, in the NAV world you can work with multiple versions and CUs in your DEV environment without any conflicts. You can build a combination of NAV version + CU automatically in a few minutes and then instantiate it in a matter of seconds.

And the last thing, about Azure Functions and the foundation I mentioned in my previous comment: do you think this could be achieved using Swagger? I hope so, but of course somebody would need to provide a code generator for it (Swagger Codegen). I haven’t used it in practice yet, so this is just an idea.

And again, thanks for your blog posts. All of them are very, very useful.

Well, it’s true that we are now seeing this gap between AL and C/AL, with AL introducing new types that C/AL doesn’t have (yet). It will definitely be a problem for a while, but you can use your AL-built extensions on-prem. You should not be maintaining both a C/AL and an AL version of your extension. Keep in mind that this is still a work in progress, and that AL, when it’s released, will be able to fully replace C/AL for extension development; then this problem will be a non-issue, and you get closer to your one-IDE, one-common-language idea.

Regarding Swagger, I don’t know if it could be achieved; I have never personally tried anything with Swagger. The world is full of tools and technologies, and I am getting too old and too busy to check all of them out. From what I read about it, it seems strong and good and all, but how exactly it would work in this scenario, or what would need to be done to enable it, I don’t know.

And you’re welcome, I really appreciate it! 🙂

One word: Excellent!

Thanks for the post – keeping me up to date!

Regarding .NET – I am disappointed that .NET is not considered suitable for inclusion with NAV. I was only just getting enthusiastic about using it in mainstream development. Ironically, I wrote .NET HttpClient code last week.

Managed code! Very disappointing… What went wrong? May as well be Win32.

Sounds like a cut-down .NET library subset is required, based on the resources it needs access to… Core?

Well, read my previous post about .NET – it explains the reasons why .NET is gone from Extensions “v2”.

Why isn’t Azure Functions an object type in NAV?
