On-prem is where NAV has run for ages, and where it will primarily run for a while yet. So I tested several on-prem configurations with different processors, disks, and memory to see how they behave in the scenarios I described in the original post.

I don’t have too many different hardware configurations to test, but I do have these two:

- An i7-3770 machine with two OCZ Vertex4 SSDs, a Western Digital Caviar this-or-that HDD, and 32 GB of RAM.

- An i7-6700K machine at 4 GHz with two Samsung M.2 950 SSDs (at 2.5 GB/s read and 1.5 GB/s write speed), two Samsung EVO Pro 850 SSDs (at 500 MB/s read/write), an 8 TB Seagate something-or-other HDD, and 64 GB of RAM.

All in all, I tested these configurations with:

- Data and log being on different SSD disks.

- Data and log being on the same SSD disk.

- Data and log being on the same magnetic HDD.

Let’s see the results.

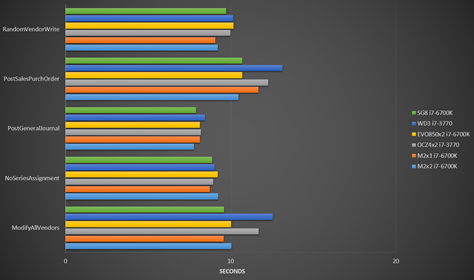

First, let’s see how well these configurations perform in the typical business-logic scenarios. Here’s the chart:

What is obvious right away is that, despite the different hardware configurations, performance is roughly the same.

As expected, the M.2 disks with data and log separated perform the best, while the old WD Caviar with data and log on the same drive performs the worst. Still, the differences are not as dramatic as one would expect.

Also, the i7-6700K outperforms its older brother, the i7-3770, but again, the difference is far from dramatic.

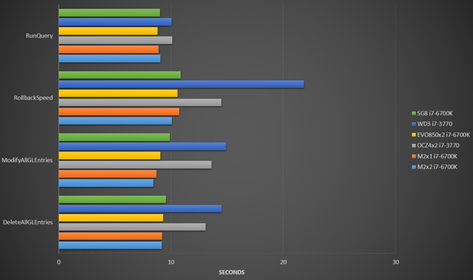

However, let’s not make any conclusions yet, and let’s first take a look at those test scenarios that expose raw SQL performance under conditions for which SQL was designed:

Here, the results are more in line with expectations. Since both physical machines had ample memory, SQL Server quickly cached all the necessary data, so if anything, these tests show that disk speed is less important than fast memory. The old WD HDD struggled with most of the tests here, as did the old OCZ Vertex4 (honestly, I expected this guy to show more endurance), but all other configurations showed fairly consistent performance.

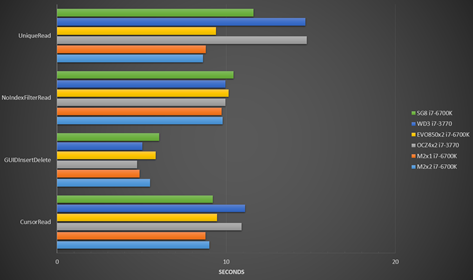

Let’s take a peek at the last group of tests, which expose SQL performance under cruel and unusual conditions that it was not designed to endure:

These results show that disk speed matters for queries that are very inefficient both to read and to cache: situations where SQL can neither retrieve records efficiently nor expect to benefit much from caching.

However, when it comes to table scans, it again becomes obvious that disk layout does not really matter; the only thing that matters is the amount of memory available.

These results are the least interesting of all. They show what you probably already know: when running NAV on-prem, beef up the memory, get good disks (all those vast MB/s don’t matter as much as memory does), and you are good to go. Once warmed up, SQL Server will cache most data, and most operations will be blazing fast.

However, while these results might not be too exciting, please keep them in mind, because in my next post I’ll show the performance of an Azure VM, and then you’ll be able to compare on-prem and Azure VM performance.

See you in a few.

A quick side note:

It is just SSD, not SSD drive or SSD disk. Otherwise you are saying “Solid State Drive Drive”. 🙂

But good post. I had just hoped you would run the on-prem tests with databases that couldn’t fit completely in memory, so that storage I/O would actually be exercised, or alternatively force the SQL instance memory lower.

Have you tried using Azure SQL and Dynamics NAV with 400 GB databases and 200 users?

Thanks for the terminology clarification 😉 It’s the same when people say LCD display or LED diode. Not such a big deal. Anyway, yes, I realize that it would make a big difference to measure performance when the hardware is pushed to (or beyond) the edge, rather than within its “comfort zone”. I might repeat the tests under local virtual machines, and then try reducing the amount of RAM or available cores or something.

No, I didn’t try Azure SQL with large databases and a large number of users. I expect that overall performance in those workloads would be reasonable and acceptable. As I already pointed out in the post, SQL Azure is not designed for single-user workloads.

Hi Vjeko,

Thanks for your posts (this one and all the ones before ;-))

Just one question: in your configuration, are the NST and the SQL instance on the same server? If so, is that a recommendation for production environments, to limit network bottlenecks?

We usually create one machine for SQL and one for the NSTs (one NST for client services, one for SOAP/OData services, one for NAS). Do you have any comment on that?

Thanks for your reply.

Best Regards,

Hi Julien. In my tests I did run NST and SQL on the same machine, to eliminate the network and related latency from the tests as much as possible. It’s neither a recommendation nor a best practice to put everything on the same machine, as it limits your scalability and increases your costs. You should definitely have one machine for SQL, and then as many NSTs as you need for different purposes. I merely wanted to eliminate latency so as to make the tests (between on-prem and Azure) as comparable to each other as possible, since on Azure you cannot control latency as much as you can on-prem.

Just wondering if anyone has done similar testing for AWS configurations?

pbs@columbusglobal.com
