I said a word or two about progress last week. Apparently, VS Code is not the only place where we take a small step back to be able to make a huge leap forward; .NET might seem like another one.

You know it, right? You know that if you want to run your .NET code in D365 for Financials, you are out of luck, and you do know that this applies as much to Microsoft .NET Framework out-of-the-box types as it does to your own, custom-built .NET assemblies. If you don’t know that yet, then let me bring you up to date: in your D365 apps built on Extensions “v2” technology, you won’t be able to use anything .NET; you simply won’t be able to compile AL code that includes a DotNet variable declaration.

This is neither fake news, nor is it news per se. It has been known at least since October last year, when Microsoft first presented the AL Language extension for VS Code during Directions US in Phoenix. Soon after the VS Code session there was a round table (in all honesty, I have never seen a table, let alone a round one, at any of the round-table sessions at any conference) on the future of .NET, and the mood was grim. At first everyone thought it was a bad joke, then everyone held high hopes that Microsoft was simply “feeling the pulse” to see how the channel would react to such a disturbing change. But soon it became obvious that .NET interoperability is on its way to being gently ushered out of the (relevant) technology stack of NAV, and that we should start getting ready for the day when it’s not there anymore.

So, what is the current state of .NET in NAV, what is the future of it, and what can you do about it?

Let’s take them one by one.

Current State of Affairs

It’s very simple, in fact. We still have .NET and we have it wherever we need it. It is still there, but it’s on its way out.

For the sake of this article, let me split all our development work (“us” being “partners, VAR or ISV, in the channel”) into two categories: extension development, and all other development. And then, let me split the extension development into two subcategories: extension “v1” development, and extension “v2” development. Once the field is set, you will at least know exactly what I am talking about.

“All Other Development”

Now that we have the stage set, let me immediately address the “all other development” category. If you are in this category, and this category is all that interests you, then you have no reason to worry. Actually, you have a ton of reasons to worry, but they have nothing at all to do with .NET. As far as .NET goes, nothing has changed for you here, nothing is changing here, and most likely nothing will change here for a possibly very long while. As long as Microsoft keeps publishing downloadable RTMs and CUs that you can install on your own or your customer’s box, you will be able to declare, compile, and run DotNet variables of any sort.

Now, I don’t know it for a fact, but I can make an educated guess: when (not if, but when) we get VS Code for general development, not just for extension “v2” development, I dare to believe that you will still be able to declare DotNet variables and deploy code that uses them to non-D365 boxes.

But this is not what you should be doing. You should be shifting your development effort towards extensions, so let’s take a look at what we have there.

Extensions “v1”

All Extensions “v1” development is done in the classic Development Environment and when building the “v1” .navx file you can naturally use the DotNet variables and select types either from .NET Framework assemblies or your own custom assemblies. However (and a big one at that)…

If you are targeting D365 with your “v1” extension, then you must reference only the approved (whitelisted) types and namespaces from the .NET Framework library, and you may make your own assemblies a part of the .navx file only if your own C# code references only approved types and namespaces. If you directly reference a non-approved (blacklisted) type (for example, types from the System.Reflection namespaces), Microsoft may reject your extension during the validation process. So, you can still use .NET interoperability, but only for “safe” (supported) things.

Which types and namespaces are supported, and which aren’t? It’s all explained in Appendix B of the document “Develop the technical aspects of your app”. The wider context of the entire validation process can be found here: https://docs.microsoft.com/en-us/dynamics365/financials/about-develop-extensions
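To make the rule concrete, here is a minimal Python sketch of a pre-check you could run over the fully qualified .NET type names your “v1” code uses, before submitting it for validation. Only System.Reflection comes from this article; the NON_APPROVED_PREFIXES list and the function names are hypothetical, and the authoritative list of approved and non-approved namespaces is the one in Appendix B.

```python
# Hypothetical pre-check: flag .NET types whose namespace is on a denylist.
# Only System.Reflection comes from the article; extend this tuple from
# Appendix B of "Develop the technical aspects of your app".
NON_APPROVED_PREFIXES = ("System.Reflection",)


def is_non_approved(type_name: str) -> bool:
    """Return True if a fully qualified .NET type name is in a denied namespace."""
    return any(
        # match the namespace itself or anything nested below it,
        # but not a sibling such as "System.ReflectionFoo"
        type_name == prefix or type_name.startswith(prefix + ".")
        for prefix in NON_APPROVED_PREFIXES
    )


def flag_types(type_names):
    """Return the type names that would likely fail validation, in input order."""
    return [name for name in type_names if is_non_approved(name)]


if __name__ == "__main__":
    used = ["System.String", "System.Reflection.Assembly", "System.Text.StringBuilder"]
    for name in flag_types(used):
        print("non-approved:", name)
```

A prefix check is deliberately crude; it only tells you where to look, not whether Microsoft will accept the extension.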

Extensions “v2”

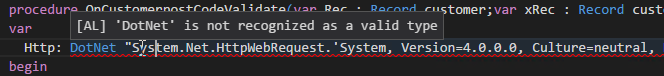

When it comes to building “v2” extensions using VS Code, the situation is plain and simple: you cannot use the DotNet type. It’s terrifyingly simple, in fact:

As long as your code uses a DotNet variable, you cannot compile it. End of story.

And you should not really hope for a big change here, because in all likelihood there won’t be one. This is an intentional decision by Microsoft, not some not-yet-developed-but-still-on-the-table feature that, fingers crossed, might find its way into RTM. It is as much off the table as it can possibly be.

What do we do?

Well, first of all, you can come to terms with the fact that, as far as Extensions “v2” are concerned, .NET interoperability is gone, and gone for good. And trust me here: it hurts Microsoft as much as it hurts you. Remember, Microsoft did not remove .NET interoperability because of some evil conspiracy to annoy partners, and they are not now secretly toasting to the cunningness of the idea. No, far from it. .NET interoperability was probably the most important addition to the development stack ever, and Microsoft is fully aware that removing it will hurt a lot: it will hurt partners, who won’t be able to achieve everything they could before, and it will hurt Microsoft, which will have weaker integration across its own technology stack than it had before.

Why did they do it, then? They did it because they absolutely had to.

.NET, while extremely useful, is extremely dangerous when it is completely open in an environment such as D365. As long as everything runs on your own box, there is not much concern about the .NET code you run. But when it comes to running your .NET code in Microsoft’s own backyard, in the D365 infrastructure, it becomes very difficult to isolate your code so that it runs safely both for you and for others, while at the same time remaining stable and performing fast.

Consider this: in D365, it’s not just your app that runs. It’s the base app and hundreds, perhaps thousands, of other apps, randomly installed by D365 tenants. A malicious app could attempt to pull sensitive data from other tenants. Or it could intentionally try to bring the server down. That part can be (somewhat) easily controlled, and malicious apps are less of a problem. It’s the poorly engineered ones with sloppy code that could pose a much higher risk: your badly written .NET code could consume all the resources on the server, attempt invalid access to the file system, or do a number of other things that make the NST hang. When managing C/AL code, the unit of control is the user session: you can end an individual user session and solve the problem. With .NET code, however, the unit of control is the whole NST: once an assembly is loaded and goes berserk, you must restart the NST to fix the issue. This is unacceptable, because there may be hundreds of tenants using the same NST in D365, and you must not cause downtime for them.

All in all, it would have been mighty difficult for Microsoft to enable us to keep using .NET without exposing them to all the risks that come with having an open architecture that allows you to run your own .NET code in there. They could have easily spent their entire VS Code or Extensions “v2” budget just trying to keep this alive, and they would still probably not be able to do it in a satisfactory way.

So no, don’t hope for the moment when Microsoft comes to their senses and decides to give us our .NET back, because it’s not going to happen. And again, Microsoft is not happy that it has to be the way it has to be.

And no, I am not trying to sell this to you as “an amazing new opportunity”, like a rookie marketer, no… This is crap and I hate it and oh how much I’d have loved if it hadn’t come to this.

But the sooner you make peace with the fact that you won’t have .NET interoperability in “v2”, the sooner you will be able to move on and see that the world is not really that black once you cross this hill.

It’s going to be all pink, then, eh?

I am not saying it’s going to be all pink; I am merely saying it is not going to be as black as it looks.

You know, Microsoft is neither naïve nor malicious. They understand two things: that we all (Microsoft and the entire D365 partner ecosystem) are in the same boat, and that we either all succeed and win together, or all sink to the bottom together. There is no scenario where Microsoft survives and the partner channel perishes (at least as far as D365 goes), nor is there a scenario where partners survive and Microsoft goes down (again, at least as far as the D365 business goes). The thing is: very few people need a vanilla ERP, or at least vanilla NAV. D365, in its pristine vanilla flavor, is still both too complicated and too generic to be usable directly out of the box. Very few companies are happy with what they can get out of the box, and NAV was never a success because of what it could provide out of the box, but because of its high flexibility, which allowed a high degree of customization, which in turn enabled a high degree of fit. The degree of fit that vanilla D365 for Financials provides to a company is too low to be compelling, certainly at the price at which it is sold now (and will be sold, unless it ever becomes free). The only way customers will be satisfied with D365 is if the apps and solutions provided by partners (us) deliver end-to-end, deep vertical support for industry-specific business processes.

That said, for us all to succeed (not just for Microsoft to succeed), we must be able to write those compelling vertical apps and populate AppSource with as many (preferably vertical) apps as possible, as soon as possible.

And trust me, Microsoft is aware that their decision to remove .NET interoperability from Extensions “v2” stack is not going to make it easier for us to provide those apps, but a great deal more difficult.

So, they’ve decided to make things that used to be possible only through .NET interoperability possible in other ways, for example, by giving them AL language-level support. This has resulted in support for invoking RESTful web services directly from AL with built-in AL types, rather than having to go through an interoperability layer. So now you have HttpClient, HttpContent, HttpHeaders, HttpRequestMessage, and HttpResponseMessage available as AL types. Declaring and using them is much simpler than it was with their DotNet equivalents. The same has happened with AL support for JSON. Since most RESTful web services simply return JSON, you now have JsonToken, JsonObject, JsonArray, and JsonValue available as AL language-level types.
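It helps to keep the shape of the JSON model in mind: in AL, a JsonToken is always exactly one of a JsonObject, a JsonArray, or a JsonValue. The Python sketch below is not AL code; it only mirrors that model by walking a parsed (made-up) response and labeling each node with the AL type that would hold it. The function names are hypothetical.

```python
import json


def al_json_kind(token) -> str:
    """Map a value produced by json.loads to the AL type that would hold it."""
    if isinstance(token, dict):
        return "JsonObject"
    if isinstance(token, list):
        return "JsonArray"
    # strings, numbers, booleans and null all end up in a JsonValue in AL
    return "JsonValue"


def walk(token, path="$"):
    """Yield (path, AL type name) for every token in a parsed JSON document."""
    yield path, al_json_kind(token)
    if isinstance(token, dict):
        for key, child in token.items():
            yield from walk(child, f"{path}.{key}")
    elif isinstance(token, list):
        for index, child in enumerate(token):
            yield from walk(child, f"{path}[{index}]")


if __name__ == "__main__":
    # made-up response body, standing in for what a RESTful service might return
    body = '{"customer": {"no": "10000", "tags": ["retail", "eu"]}, "total": 42.5}'
    for path, kind in walk(json.loads(body)):
        print(f"{path}\t{kind}")
```

Every node is a JsonToken first; in AL you would check which of the three kinds it is before converting it and reading from it.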

If you ask me, I’d trade DotNet equivalent types for these AL built-in language types any day. Interoperability is always clumsy. And even though you can typically get more functionality from .NET interoperability than from a built-in type (because for interoperability Microsoft only has to expose existing functionality, whereas for built-in language-level support they have to specifically program all types and members to be consistent with the entire AL language), the simplicity and safety of using a built-in type (in my opinion) win over more complex, and possibly less safe, interoperability types.

And the best of all is, Microsoft won’t stop at these nine types for HTTP and JSON that they already support in AL. They are also working hard to give us functionality from other areas of .NET that are as safe to use as anything can ever be, that we need badly, and that are now inaccessible. Imagine things like collections, more powerful string and date/time types, regular expressions, encryption, and the like. Microsoft did not commit to delivering these, but as I said, they not only want to make our life easier, they need to make our life easier, so they are actively looking into how to provide support for those areas of .NET interoperability that we depended upon the most. And they are very open about it: they will gladly accept your input through their AL language GitHub project. If you need support for some .NET functionality at the language level, don’t hesitate to navigate to http://github.com/Microsoft/AL and ask a question in the Issues section. Microsoft will treat these as questions, not as feature requests, and certainly not as bugs, but at least they will gain better visibility into what it is in the .NET Framework that partners absolutely can’t live without. Without us, that’s an impossible question for them to answer.

And what if Microsoft doesn’t do it for me?

In the very worst scenario of all bad scenarios, you have some .NET functionality that will never be provided as a built-in AL-language feature so you cannot possibly hope to use it, and this makes it impossible for you to build your extension. If this happens to you, you are still not out of luck.

One way to solve your problem, which is both very simple and the Microsoft-recommended way to go about it, is to use Azure Functions. They are very easy to develop and maintain; they perform well, especially with D365, because it also runs on the Azure stack; they are scalable; and they are not that expensive to run. And most of all, they can be written in C#, which means that your investment into developing custom .NET assemblies is not entirely wasted, and that transitioning from assembly-based C# code to serverless, cloud-based C# code is going to be a fairly simple thing to do. As long as your .NET code was stateless, you won’t have any significant problems migrating your C# code to Azure, and even if it wasn’t entirely stateless, you can still retain state in Azure Functions if you want to. The cherry on top is that you can invoke those functions directly from AL.
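The key point is statelessness: everything the function needs arrives in the request, and everything it produces goes back in the response. As a minimal sketch of that contract, here is the core of a hypothetical regular-expression service (regular expressions being one of the areas this article lists as missing from AL), written in Python rather than C# and without the Azure Functions hosting code around it. The JSON request shape and the name regex_function are assumptions; from AL, you would post such a body with HttpClient and read the answer with JsonObject.

```python
import json
import re


def regex_function(request_body: str) -> tuple[int, str]:
    """Stateless handler: everything it needs arrives in the request body.

    Hypothetical contract: {"pattern": "<regex>", "input": "<text>"} in,
    {"matches": [...]} out, or {"error": "..."} with status 400.
    Returns a (status code, JSON response body) pair.
    """
    try:
        request = json.loads(request_body)
        pattern = re.compile(request["pattern"])
        matches = [m.group(0) for m in pattern.finditer(request["input"])]
    except (ValueError, KeyError, TypeError, re.error) as err:
        # malformed JSON, missing fields, wrong types, or an invalid pattern
        return 400, json.dumps({"error": str(err)})
    return 200, json.dumps({"matches": matches})


if __name__ == "__main__":
    body = json.dumps({"pattern": r"PO-\d+", "input": "Lines PO-1001 and PO-1002"})
    print(regex_function(body))
```

Because nothing is kept between calls, any number of instances can serve requests, which is what makes this kind of code straightforward to move out of an assembly and into a function.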

As you see, it’s not exactly pink, but it’s not entirely black either.

As a matter of fact, I am excited about this new world, because it also opens up a lot of opportunities.

Okay, now I don’t buy it; now you are selling me feces tauri.

No, I am not. I believe every word I said.

For a person who has built a lot of .NET-based stuff targeting NAV, and then had to maintain all that stuff across various deployments, .NET was a mess. Making your assemblies available on every machine that needs access to them is not as simple as it looks in the help files and how-to videos. And your C/AL code is certainly less portable and less self-contained with all those dependencies on stuff very external to it. Any time you had to move a solution with heavy dependencies on .NET, you could expect problems. And no, putting the assemblies into the database was not the solution; it was merely a different kind of repository for assemblies, no more, no less (actually, less, in my opinion, because it came with caveats).

Not even having the option of external dependencies is a very good thing for the quality, portability, and maintainability of your AL code. When everything is in AL files, and nothing lives in external assemblies that have to be handled separately, it’s so much easier to maintain all your code, both during development and during deployment. And even when you use Azure Functions, which are obviously not “contained” in AL, they are not a complicated kind of dependency; they are merely a web service out there. When you move the code that depends on them from one box to another, there is nothing you have to do with the Azure Functions specifically.

Conclusion

To wrap this up, if you don’t care about extensions, and you believe you never will, then you have nothing to worry about as far as .NET goes. You can continue using it happily ever after for as long as the classic Development Environment is available, and very likely even into the foreseeable future.

But if you care about extensions, you should abandon the .NET stack even if you are firmly in the “v1” boat. “V1” will be gone come winter, and then, if you still have DotNet variables around, you’ll suffer. You should get ready. You should start replacing your DotNet variables with built-in AL types where you can, and you should let Microsoft know which types you need where there are none yet in the AL language. And you should migrate all your custom .NET code into Azure Functions wherever there is no way to express it in simple, pure AL.

And even if you are in the first group, the one that has nothing to do with extensions and still believes that sitting happily on stair 1 or 2 of Freddy’s architecture staircase is a smart thing to do, you can benefit from moving away from .NET. Why? Because that way you are 100% future-proof. If at any point Microsoft cuts support for .NET altogether, even for on-prem development in VS Code when it finally arrives, you won’t have issues migrating your code from C/AL to AL. And if, at some other point, you decide to turn your solution, whatever it is, into a D365-ready extension, you’ll be happy it contains no DotNet stuff.

One final note: seeing .NET on its way out of the NAV technology stack hurts me personally quite a lot. If you have followed my blog, you know that I absolutely love .NET, and that I have dedicated so many posts to getting the most out of this amazing technology integration, which has always left quite a bit to be desired, but has still been an indispensable part of our arsenal for the past 8 years. But as I said in my last post, progress often requires you to take a step back so you can make a big leap forward, and I see the departure of .NET as one necessary step towards making AL the beautiful language it deserves to be.


Looking for some non-NAV, non-Microsoft job to switch to… My 18-year NAV career is over.

Sorry to hear that. Are you sure there is nothing left for you to do?

I’m not a fan (more like hater) of “centralized computing” aka “cloud”. This is our past, not a future. We had it all already many years ago, in “evil IBM era”. People just don’t understand this. Youngsters never had it and think this is “new”.

Also, Microsoft kills everything it touches. So many failures in the last several years, it’s just ridiculous. NAV was a flexible, customizable, customer-driven ERP, not a monster dinosaur like SAP, JDE, PeopleSoft, or Oracle. Microsoft never got the NAV idea. What do we see now? An ordinary, browser-based (even in the “Windows client”, which is just another kind of browser), average ERP. Nobody can even say why it’s better than its competitors, simply because there is nothing to say.

So no, I don’t see any future for NAV, even in D365 shape.

I have to disagree with you, and I don’t think this is just a clash of opinions. There is a major difference between a mainframe computing paradigm and cloud computing. Mainframe is a thing of past, and there I agree 100%.

NAV is still as flexible as it was earlier, and in certain respects even more flexible. Yes, there are a number of things you can’t do, but most of them belong in the “to do” category, not the “trash bin” category.

Compare where NAV was even 5 years ago, when we didn’t have upgrade codeunits and most customers never even thought of upgrading because it was as scary as scary gets, with what we have today, with extensions upgrading live, in place, without even having to restart the client. I think the “new” NAV is way better.

The kind of “flexibility” you have at Stair 1 (of Freddy’s staircase) and the one you have at Stair 6, are light years apart, and in favor of Stair 6, not the opposite way around.

Thanks for being candid. I, for one, am sure the show is over… I predicted it sometime in 2012, the last year I attended NavTechDays. And now the 5 years of remaining momentum are over, too. What MSFT does and what the user (customer) requires are two completely different things now. So… time to go looking for some other platform. The only good news is that we’re talking about ERP, so all the business and process knowledge isn’t completely lost.

A shoe manufacturer sent two salesmen to a poor country to investigate market opportunities. The first one calls back and says: “No business for us here, nobody uses shoes.” The second one calls back all excited and says: “Whoa, what an incredible opportunity: nobody has shoes yet!”

Customers come in many sorts, and what one requires, another may not. The market of customers who will trade degree of fit for an affordable, rent-based price is huge. Huge. Did I say huge? Well, huge. 97.5% of all companies in the world have fewer than 10 employees. NAV and most other ERP software vendors have so far operated in the market segment made up of the remaining 2.5%. Think about that. I think the good days are ahead, not behind.

In other words: MSFT is willing to abandon existing customers for a new (lower) market segment. Well, what can I say… this doesn’t cut it for me. IMO, a vendor will pay dearly for this kind of behaviour. Especially if the actions look so ham fisted. And it burns down the existing partner structure. Not a good scenario to be in.

Good post (again)! Question: what about the multiplatform add-in controls? Will those also be out of scope in “v2” app development?

When you say “multiplatform”, do you mean JavaScript control add-ins? .NET control add-ins will be gone because in D365 you have only the Web client(s) available (plus the universal client), and there is no Windows client, and in all likelihood there never will be a Windows client for D365.

In the current iteration of AL, you cannot create JavaScript control add-ins, but this is simply because the thing is still in development. We will definitely be able to create them, embed them into “v2” navx files and deploy and run them from D365.

TNX. One problem less. We have just migrated our .NET control add-ins to JavaScript control add-ins (using your framework!!)

Thanks! Glad to know it works 🙂


Great post Vjeko

Thanks!

Great post, Vjeko, thought-provoking on how to do development. If we know this change is coming, we might as well start acting on it. One question: do you know if this “no .NET” restriction applies to what MS refers to as “Rusty Brown” D365, where we as ISVs can create our own “sandbox 365”, sort of a replacement for Managed Service? The reasons for removing it are still in place, but the mechanism (VS Code) isn’t, yet.

Honestly, I don’t know. I would assume not, but I have no definite information on this. However, I wouldn’t count on this being supported even in “rusty brown” scenarios.

While I agree with your reasoning on why .NET interop should be kept out of D365, I do hope Microsoft will keep it in the “traditional” NAV (should we call it that? – “classic” is already taken 🙂 ) for the foreseeable future. IMHO, adding .NET interop to NAV was the best thing that happened to the platform in years.

We agree here completely, Vytenis 🙂 I also believe that .NET interop was the best thing in the NAV platform, and I also hope they keep it for traditional deployments.

But, I will do my best to start replacing all my .NET stuff with “other” stuff, most likely Azure Functions.

I can understand that Microsoft is shifting “everything” to the cloud where “everything” tends to be realized via “microservices” (Azure Functions)… It is a trend and MS needs to follow important trends.

On the other hand, I have to agree with Alexei: all those changes could break the community, especially the experts with many years of experience. They are focused on the client’s needs and know NAV perfectly at the functional level. Because of the pace of their work, they can’t digest all these changes that come so frequently.

Of course, we have developers and consultants, and it seems very wise to keep those roles split so that the knowledge stays up to date. But I know many of the best people doing both consultancy and development. And I know that they are considering quitting…

From my point of view, the new client (Windows, web-based) is really nice but the end users were more efficient when working with the old one (the classic one).

Now, you can think I am a dinosaur, but the opposite is true. I really like progress, I felt very lucky when we received .NET interop in NAV (I use it almost every day). I like the 3 tier architecture. But I am also worried that too many radical changes could break something valuable.

PS: This is just my opinion, nothing very valuable compared to the quality of your post. Anyway, great work!!!


Hi,

So… investing in C#/Visual Studio-knowledge and -experience is still appropriate to be future ready?

Leaving aside the use of .NET for front-end amendments and for integrating local hardware, will Azure Functions take over the job? For example, XML processing, data decryption/encryption, …?

Kind regards,

Hannes

Yes, for some of those tasks you’d have to use Azure functions. We may get XML, we may get data encryption eventually on AL language level. But for now, if you want to go to D365, it would have to be hosted in a service somewhere (Azure functions, etc…)

But investing in C# and .NET in general is still a sound investment. You don’t need it for on-prem, but you need it to build your services that you host and then invoke from AL.
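For the XML case, one interim pattern (an assumption on my part, not something Microsoft prescribes) is a stateless service that turns XML into JSON, which AL can already consume through its JSON types. A minimal Python sketch of the conversion core, with hypothetical function names, follows. It simplifies on purpose: attributes and child elements share one key space, repeated tags become arrays, namespaced tags are left as-is, and text mixed with child elements is dropped. Python’s documentation also warns that xml.etree.ElementTree is not secure against maliciously constructed data, so a real service should parse untrusted input with a hardened parser.

```python
import json
import xml.etree.ElementTree as ET


def element_to_dict(element):
    """Convert an XML element into nested dicts, lists and strings."""
    node = dict(element.attrib)  # attributes become plain keys (simplification)
    children = list(element)
    if not children:
        text = (element.text or "").strip()
        if not node:
            return text  # leaf without attributes: just its text
        if text:
            node["#text"] = text
        return node
    for child in children:
        value = element_to_dict(child)
        if child.tag in node:
            # a repeated tag turns the existing entry into a JSON array
            if not isinstance(node[child.tag], list):
                node[child.tag] = [node[child.tag]]
            node[child.tag].append(value)
        else:
            node[child.tag] = value
    return node


def xml_to_json(xml_text: str) -> str:
    """Stateless conversion: XML in, JSON out, nothing kept between calls."""
    root = ET.fromstring(xml_text)
    return json.dumps({root.tag: element_to_dict(root)})


if __name__ == "__main__":
    order = "<order no='SO1001'><line item='1000' qty='2'/><line item='1001' qty='1'/></order>"
    print(xml_to_json(order))
```

The output is a single JSON object keyed by the root tag, so on the AL side it can be read with one JsonObject and navigated from there.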

Another great post Vjeko!

Do you have any tips on how to find all the places (customized objects) where I used DotNet, so I can start converting the code to AL (removing DotNet)?

Sorry, I don’t have any smart ideas here. Perhaps export all as text and then use some regex to locate objects with dotnet declarations…
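Taking the regex suggestion literally, here is a minimal Python sketch that scans a C/AL text export and writes every DotNet declaration to a tab-separated list, one row per variable or parameter. The two patterns assume the NAV 2013-and-later export format, where objects start with a line like OBJECT Codeunit 50000 My Codeunit and declarations look like Name@1000 : DotNet "'assembly'.Namespace.Type"; verify them against your own export. The cp1252 encoding and all names in the script are assumptions.

```python
import csv
import re
import sys

# Assumed C/AL text-export shapes (NAV 2013 and later); verify against your export:
#   OBJECT Codeunit 50000 My Codeunit
#   Client@1000 : DotNet "'System, Version=4.0.0.0, ...'.System.Net.WebClient";
OBJECT_HEADER = re.compile(r"^OBJECT (\w+) (\d+) (.*)$")
DOTNET_DECL = re.compile(
    r"(?:VAR\s+)?([^@;(]+?)@\d+\s*:\s*DotNet\s+\"'([^']*)'\.([^\"]+)\""
)


def scan(lines):
    """Yield (object type, object ID, object name, variable, assembly, .NET type)."""
    current = ("?", "?", "?")
    for line in lines:
        header = OBJECT_HEADER.match(line)
        if header:
            current = (header.group(1), header.group(2), header.group(3).strip())
            continue
        # finditer also catches several parameters declared on one PROCEDURE line
        for decl in DOTNET_DECL.finditer(line):
            variable, assembly, type_name = decl.groups()
            yield (*current, variable.strip(), assembly.split(",")[0], type_name)


def main(path):
    # exported NAV text files are usually not UTF-8; cp1252 is an assumption
    with open(path, encoding="cp1252", errors="replace") as source:
        writer = csv.writer(sys.stdout, delimiter="\t", lineterminator="\n")
        writer.writerow(["ObjectType", "ObjectID", "ObjectName", "Variable", "Assembly", "Type"])
        writer.writerows(scan(source))


if __name__ == "__main__" and len(sys.argv) > 1:
    main(sys.argv[1])
```

Run it with the exported file as the only argument and redirect the output to a .tsv file; sorting that file by Type shows which .NET types you use most, and therefore which replacements to look for first.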

A few days ago I started experimenting with Swagger. After some initial complications (configuring the environment, installing Java and related tools, etc.), I have finally got started with the CodeGen, and right now I can convert some API examples delivered with Swagger.

Right now the output (automatically generated AL code) is still pretty useless but I can see this could be some sort of solution.

Microsoft Azure Functions will support the OpenAPI format (I think this is still in beta), and Swagger is actively helping to shape this format.

You can see the outputs here (I repeat, nothing useful but I can see the CodeGen is very flexible):

https://twitter.com/vanakjakub/status/908035344020463616

https://pbs.twimg.com/media/DJn80vaWsAMxn5n.jpg:large

I will post the initial version into the forked version of the Swagger Codegen in one of the following days (maybe next week).

Haven’t played with Swagger myself, but I am sure it can be put to good use here.

Thanks Jakub, for your recommendations. I’ll surely explore swagger.

Also, thanks, Vjeko, for your response.

In the end, since I didn’t find a better solution on the web, I created a procedure in C# that reads the .txt file (with the NAV objects) and exports all usages of DotNet variables into a .tsv file. For this purpose, I needed to use DLLs from the NAV installation folder (Microsoft.Dynamics.Nav.Model…).
