Marketing is nice as long as it matches the reality. With Microsoft Dynamics NAV 2013, Microsoft has promised a lot of improvements, but how well does NAV 2013 stand the reality test?

Apparently, outstandingly well.

Over the past two days, I have intensively tested NAV 2009 and NAV 2013 through a series of five different tests that measure different aspects of NAV data handling. My conclusion is clear: NAV 2013 is faster than any NAV you have ever seen, including the Classic client on the native database.

Continue reading to find out more about my findings and testing approach.

Is This Some Kind Of A Trick?

No, this is not a trick. It’s for real.

Several days ago I wrote about performance improvements in Microsoft Dynamics NAV 2013, and then got a comment that it all looks nice in theory, but that NAV 2013 is actually slower than NAV 2009. Per Mogensen of Mergetool.com has done some testing and published a video demonstrating the results.

I’ve reviewed the video, and I’ve noticed a couple of possible issues with how the performance was measured, so I decided to check for myself. My results show something completely different: not only is NAV 2013 faster than NAV 2009, it’s also faster than the Classic client on the native database, which is kind of a holy grail of NAV performance.

And then I double-checked with Per, and he confirmed to me that he also noticed a couple of problems himself. He has repeated the tests, and his tests now also show great improvement in NAV 2013. His updated video is here.

But, let’s continue with my results.

The Racing Horses

To find out how fast NAV 2013 really is, I’ve compared it to other flavors of NAV. The racing horses were:

- Microsoft Dynamics NAV 2013 RoleTailored Client

- Microsoft Dynamics NAV 2013 Web Services

- Microsoft Dynamics NAV 2009 RoleTailored Client

- Microsoft Dynamics NAV 2009 Web Services

- Microsoft Dynamics NAV 2009 Classic Client – SQL Server Option

- Microsoft Dynamics NAV 2009 Classic Client – Native Database Option

So, quite a jolly bunch. I would have loved to also test the performance of the NAV 2009 NAS on both the native database and SQL Server, but I chose to let it pass.

The Environment

All of the applications ran under exactly the same operating conditions, in exactly the same environment and with the same system configuration settings. The following are the system specifications:

- Intel Core i7-2620M CPU (dual core, four threads)

- 8 GB of RAM

- OCZ Vertex2 SSD drive

- Windows 7 Ultimate, Service Pack 1, 64-bit

- Microsoft SQL Server 2008 R2, Standard Edition, 64-bit

The machine was physical, not virtual. All flavors of NAV were installed on the same physical instance.

The Tests

All six applications were subjected to the same testing conditions, and ran the following tests:

- Creating, releasing, shipping and invoicing a sales order, 100 times in a row (Per Mogensen’s original test)

- Iterating through all customers, vendors, and items, 500 times in a row

- Iterating through a filtered list of customers, vendors, and items, with an inefficient filter over a text field, 500 times in a row

- Iterating through a unique list of G/L accounts from the G/L Entry table, 500 times in a row.

- Manually summing flow fields of all customers, vendors, and items, by calling CALCFIELDS on each row, 500 times in a row.

In addition, under NAV 2013 I’ve run the following test as well:

- Manually summing the balance and inventory flow fields of all customers, vendors, and items, respectively, by calling SETAUTOCALCFIELDS before the iteration, 500 times in a row.

The Methodology

Before each of the tests, I prepared the environment by doing the following:

- I stopped all instances of NAV and closed all clients and made sure no applications were running.

- I created a new empty database.

- I restored the W1 database into the just created database.

- I started the relevant service tier and clients, and then ran all the tests three times to warm the system up.

- I cleared the time logs to eliminate the warm-up results, and make sure they don’t distort the test results.

- I closed any unnecessary applications (e.g. the Classic Client before using the RTC to run the tests) to ensure that only the environment running the test was open.

Then, after the environment was ready, for each of the tests I did the following:

- Ran the test three times in a row.

- Copied the results from the log table into Excel.

The warm-up is indeed slower under NAV 2013 than under any other system, and my methodology disregarded the warm-up measurements. Warm-up times mostly don’t show anything useful.

Each of the tests records the time right before the test starts, and then again right after it ends. The time difference is then logged into the database.

I measured the time by creating two DateTime instances, setting them to current system time, then subtracting the start time from the end time. This gives the duration in milliseconds. In addition to this, under NAV 2013 I’ve added another measurement method: the .NET System.Diagnostics.Stopwatch class, just in case – if there is anything flawed with NAV’s time variable in 2013, certainly nothing will be wrong with the .NET Stopwatch. As expected, there was no difference between what NAV calculated and what the System.Diagnostics.Stopwatch measured.
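The two measurement approaches can be sketched as follows. This is a minimal Python illustration, not the C/AL from the benchmark objects: `datetime.now()` stands in for reading the current system time into a DateTime variable, `time.perf_counter()` stands in for the .NET Stopwatch, and `run_test` and `workload` are hypothetical names.

```python
import time
from datetime import datetime


def run_test(workload):
    """Time one run of `workload` two ways and return both durations in ms.

    `workload` is a hypothetical stand-in for one benchmark test.
    """
    # Method 1: two wall-clock readings, subtracted (the DateTime approach).
    start_time = datetime.now()
    # Method 2: a monotonic high-resolution counter (the Stopwatch analogue).
    start_ticks = time.perf_counter()

    workload()

    stopwatch_ms = (time.perf_counter() - start_ticks) * 1000.0
    wall_clock_ms = (datetime.now() - start_time).total_seconds() * 1000.0
    return wall_clock_ms, stopwatch_ms


if __name__ == "__main__":
    wall_ms, sw_ms = run_test(lambda: time.sleep(0.05))
    print(f"wall clock: {wall_ms:.0f} ms, stopwatch: {sw_ms:.0f} ms")
```

On a healthy system the two numbers should agree to within a few milliseconds, which is the same cross-check described above.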

In the results, all measurements I present are in milliseconds, and in all test results I’ll show, less is better.

The Results

Finally, we get to the point which I believe you have awaited as much as I did: the results. Let me present them test by test.

1. Sales Orders

In Per Mogensen’s tests, the NAV 2009 Classic Client on a native database is the winner of this test. At the pure C/AL level, NAV 2013 there performs almost as fast as Classic on native, but the RTC under NAV 2013 is still the slowest. My results are very different. I can’t be 100% sure why, but I’ll give a couple of thoughts at the end of this post.

In any case, these are the measurements I got:

| 2013, Web Services |

5,169 |

| 2013, RoleTailored Client |

6,186 |

| 2009, Classic Client, Native |

7,467 |

| 2009, Web Services |

11,778 |

| 2009, Classic Client, SQL |

14,420 |

| 2009, RoleTailored Client |

14,690 |

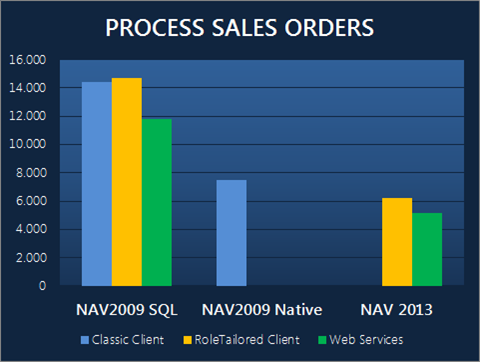

A picture is worth a thousand words, so here it comes:

Image 1: Sales Orders test results

As I expected, the Web Services perform faster on both 2009 and 2013, because there is no user interface and only the NST is involved in code execution. Under Web Services, NAV 2013 performs about 44% faster than the fastest breed of NAV ever, the Classic Client on a native database. Stripped of the burden of a UI, NAV 2013 Web Services practically demonstrate pure SQL Server performance, and SQL Server is faster than ever before, just as it says on the tin.

But NAV 2013 RTC also showed respectable performance. It performed 21% better than the NAV 2009 Classic Client on a native database. I kind of didn’t expect this to occur, because the Classic Client on a native database is a native ISAM system and NAV business logic is entirely optimized to fly on it. What astonishes me is the 128% improvement of NAV 2013 over NAV 2009 in Web Services, and the 137% improvement in RoleTailored Client performance. That’s truly amazing.
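The percentages quoted for the Sales Orders test appear to follow from dividing the slower duration by the faster one and subtracting one. The post does not state the formula explicitly, so treat this as an assumption; `percent_faster` is a hypothetical helper, and the sketch is in Python rather than C/AL.

```python
def percent_faster(slower_ms, faster_ms):
    """Return how much faster the second duration is, as a percentage."""
    return (slower_ms / faster_ms - 1.0) * 100.0


# Average durations in milliseconds, copied from the Sales Orders results.
sales_orders_ms = {
    "2013 Web Services": 5169,
    "2013 RTC": 6186,
    "2009 Classic Native": 7467,
    "2009 Web Services": 11778,
    "2009 RTC": 14690,
}

# (slower configuration, faster configuration) pairs discussed in the text.
comparisons = [
    ("2009 Classic Native", "2013 Web Services"),
    ("2009 Classic Native", "2013 RTC"),
    ("2009 Web Services", "2013 Web Services"),
    ("2009 RTC", "2013 RTC"),
]

for slower, faster in comparisons:
    gain = percent_faster(sales_orders_ms[slower], sales_orders_ms[faster])
    print(f"{faster} vs {slower}: {gain:.0f}% faster")
```

Under this formula the four pairs come out at 44%, 21%, 128% and 137%, matching the figures in the text.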

Obviously, NAV 2013 provides considerable improvement over NAV 2009.

2. Repeated Read

This test measures the capability of a client to iterate through a series of records. Iteration is something that C/AL code frequently does, and where any flavor of NAV somewhat sucked under SQL Server, as compared with the sheer performance of the native database. Again, the native database and C/AL as a language are optimized precisely for this kind of access, and it was never a wonder that native was king here.

However, NAV 2013 seems to have just deposed that king:

| 2013, Web Services |

16 |

| 2013, RoleTailored Client |

25 |

| 2009, Classic Client, Native |

644 |

| 2009, Web Services |

8,081 |

| 2009, RoleTailored Client |

8,133 |

| 2009, Classic Client, SQL |

8,637 |

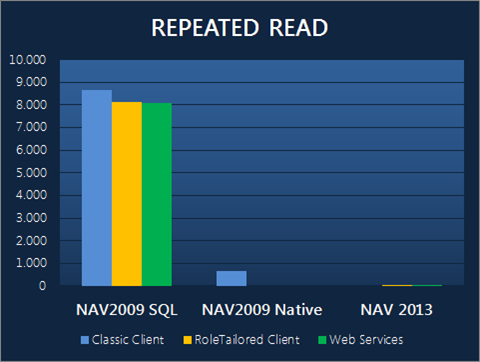

Graphically, this is how it looks:

Image 2: Repeated Read of Customers, Vendors and Items

NAV 2013 is lightning fast here, and no wonder why: the caching. While NAV 2009 on SQL Server had to maintain a series of cursors, NAV 2013 ran a single T-SQL query, and then cached the records for subsequent reads. It simply outperforms everything.

I don’t want to spend any time comparing the speed of NAV 2013 with the speed of NAV 2009 native; what I want to do is point out the speed improvement by a factor of more than 400x over NAV 2009 on SQL. How cool is that?
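To illustrate why caching pays off so heavily in a repeated-read test, here is a toy read-through cache in Python. It is only a sketch of the general idea; it says nothing about how the NAV 2013 service tier actually implements its cache, and `CachingReader` and `fake_database` are hypothetical names.

```python
class CachingReader:
    """Toy read-through cache: query once, serve repeats from memory."""

    def __init__(self, fetch_all):
        self._fetch_all = fetch_all  # hypothetical function that hits the database
        self._cache = {}
        self.queries_sent = 0

    def read(self, table):
        # Only the first read of a table reaches the database.
        if table not in self._cache:
            self.queries_sent += 1
            self._cache[table] = list(self._fetch_all(table))
        return self._cache[table]


def fake_database(table):
    """Stand-in for a set-based query returning every row of a table."""
    return [f"{table}-{n}" for n in range(3)]


reader = CachingReader(fake_database)
for _ in range(500):  # 500 iterations, as in the test
    for table in ("Customer", "Vendor", "Item"):
        rows = reader.read(table)

# One query per table, regardless of the number of iterations.
print(reader.queries_sent)
```

With a cache like this, 499 of the 500 iterations never touch the database at all, which is the kind of effect that would explain millisecond-level results.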

3. Repeated Read of Filtered Tables

The beauty of this test is that it shows how well a system copes with a complex filter. I’ve set the filter on the Name column of the Customer and Vendor tables, and on the Description column of the Item table, to this: @*a* (it searches for the letter a anywhere in the field, in a case-insensitive way).

This filter can’t make meaningful use of any key, so what wins or loses this race is the capability of the database management system to work through every row the hard way.
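What the @*a* filter asks for can be sketched in a few lines of Python. The sample names are made up for illustration and are not taken from the W1 database; `matches_contains_a` is a hypothetical helper.

```python
def matches_contains_a(value):
    """Mimic the filter @*a*: case-insensitive 'a' anywhere in the field."""
    return "a" in value.lower()


# Hypothetical sample names, not data from the W1 database.
names = ["Alpine Traders", "BRAVO SUPPLY", "Echo Ltd.", "Up2You"]

# A sorted index on the name cannot narrow this down, because the match
# can sit anywhere in the text: every row has to be inspected.
matching = [name for name in names if matches_contains_a(name)]
print(matching)
```

Because the wildcard leads the pattern, there is no prefix to seek on, so the cost is a full scan of the table on every iteration.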

Again, NAV 2013 played this one coolly.

Here go the results:

| 2013, Web Services |

20 |

| 2013, RoleTailored Client |

24 |

| 2009, Classic Client, Native |

515 |

| 2009, Web Services |

5,720 |

| 2009, RoleTailored Client |

5,741 |

| 2009, Classic Client, SQL |

6,178 |

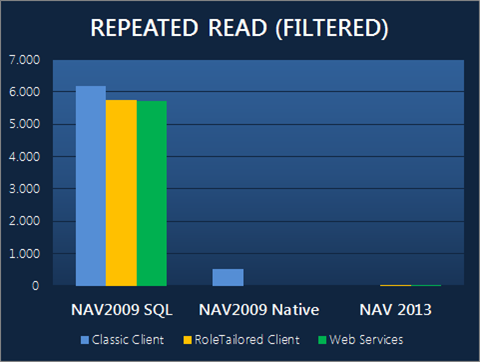

This is the graph:

Image 3: Repeated Read of Filtered Tables

While the variances among the NAV 2009 SQL Server flavors are insignificant, the improvement of NAV 2013 is again verging on insane. It’s obvious that the cache kicked in here big time, but I also assume that there may be some .NET-level code optimization that made this kind of thing possible.

4. Reading Unique G/L Account Numbers from G/L Entry

Now, this was a tricky one. It uses several concepts, the combination of which is a total no-brainer for the ISAM-based NAV 2009 on native, but verges on rocket science for anything SQL-related. It was literally the most inefficient thing to do to a SQL database in NAV, and running a piece of code such as this literally smothers SQL by causing it to drop existing and create new cursors all the time.

The algorithm is as follows:

1. Set the key on the G/L Account column.

2. Find the first G/L Entry row.

3. Set a filter on the G/L Account column to the G/L Account that is currently selected.

4. Find the last G/L Entry with this filter applied.

5. Remove the filter on the G/L Account column.

6. Move to the next G/L Entry row, and repeat steps 3 to 6 while there are more G/L Entry rows.
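The steps above amount to a skip-scan over a sorted key. Here is a minimal Python sketch of the same idea, not the C/AL used in the benchmark: a sorted list stands in for G/L Entry read through a key on the G/L Account column, and `bisect_right` plays the role of "filter, find last, clear the filter, step past the last match". The account numbers are made up.

```python
from bisect import bisect_right


def unique_accounts(entries):
    """Collect distinct account numbers from entries sorted by account.

    `entries` is a hypothetical stand-in for G/L Entry sorted by account;
    each element is just the account number of one entry.
    """
    result = []
    position = 0  # find the first row
    while position < len(entries):
        account = entries[position]
        result.append(account)
        # Jump just past the last row holding this account, skipping
        # every duplicate in between instead of reading it.
        position = bisect_right(entries, account, lo=position)
    return result


gl_entries = sorted(["2910", "1110", "2910", "6110", "1110", "2910"])
print(unique_accounts(gl_entries))
```

The loop body runs once per distinct account rather than once per entry, which is why an ISAM engine handles this pattern so cheaply.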

In previous versions, steps 2, 4, and 6 drop existing cursors (except in the first iteration) and create new ones in SQL Server, and I was curious to find out how well SQL coped with this task now that cursors are gone and MARS is taking their role. Far fewer rows are read in this test than in the simple repeated read, and if you understand how ISAM works, and how SQL works, you should also expect ISAM to do this with no speed penalty over a simple iterative read, while you can expect SQL to run way slower than a simple iterative read, no matter which approach it takes.

And this is exactly what the results show:

| 2009, Classic Client, Native |

478 |

| 2013, Web Services |

1,129 |

| 2013, RoleTailored Client |

1,142 |

| 2009, Web Services |

11,029 |

| 2009, RoleTailored Client |

11,113 |

| 2009, Classic Client, SQL |

11,555 |

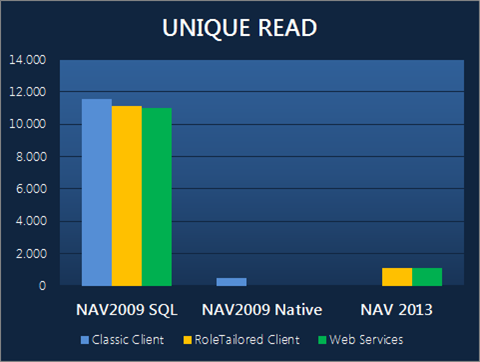

And then, the picture:

Image 4: Repeated Read of SIFT-Filtered Tables

Now, before jumping out of your seat and shouting “gotcha!”, think of this test once again. NAV 2013 is almost 10x faster than NAV 2009 here, and whatever it did deep there in its engine is close to a miracle. If it did caching to attain this speed, that caching must be pretty smart, because this piece of code was accessing some very small sets and jumping around the records like crazy.

While I have a very plausible explanation of what made NAV 2013 win all previous tests, I don’t have the faintest idea what kind of magic made it perform this well here. Yes, it is slower than native, but this was kind of like making a Formula One car compete in a rally.

Catch this: native is fully optimized to do this kind of access, and doing this is no harder for it than doing the simplest kind of data iteration. As a matter of fact, since there were fewer rows to read, this one should have been faster than the repeated read test. And it was. At the same time, NAV 2009 on SQL was slower here, because this put much more pressure on it, and it had to struggle. And struggle it did.

Yet, NAV 2013, while still struggling, has shown an incredible performance improvement to make even this kind of thing perform well. Quite a job, Microsoft!

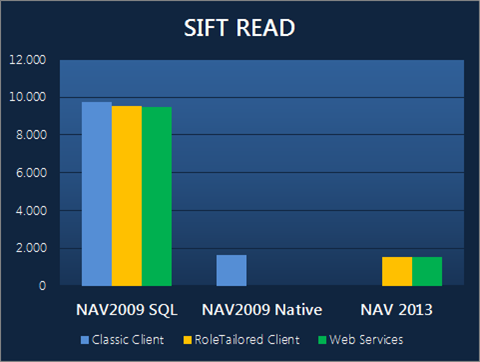

5. SIFT Read

I measured how various systems perform with SIFTs, in a scenario quite common in real life: iterating through a set of data and calculating flow fields for each row. NAV does this in many situations, and I was very curious to find out how fast NAV 2013 would be here, because of the many changes Microsoft has done in handling the flow fields in NAV 2013.

Here are the results:

| 2013, RoleTailored Client |

1,517 |

| 2013, Web Services |

1,518 |

| 2009, Classic Client, Native |

1,638 |

| 2009, Web Services |

9,500 |

| 2009, RoleTailored Client |

9,552 |

| 2009, Classic Client, SQL |

9,745 |

Or graphically:

Image 5: SIFT Read

When handling flow fields, NAV 2013 performs slightly better than native ever did, about 8% faster. This is quite a feat, if you have in mind that native handles this functionality again, well, natively, by building the flow field information right into indexes, something that SQL never could.

Okay, I assume that some serious caching took place here as well, but still, caching or not, the whole system performs better and faster in NAV 2013. Compared to the SQL Server flavors of NAV 2009, the improvement of 532% is quite amazing, and even more so if you consider that probably everybody thought Microsoft had hit the limit when it replaced SIFT tables with indexed views in 5.0 SP1. With that obviously not having been a limit at all, I now wonder whether we will see even more improvement here in the future.

5a. SETAUTOCALCFIELDS

Finally, I ran the same test as the previous one, this time using SETAUTOCALCFIELDS. I expected serious improvement, but at an average of 1,466 milliseconds, this test performed only insignificantly faster than the previous one. I expected this one to show a real improvement over the traditional CALCFIELDS approach, but it stubbornly declined. I can’t explain this, but hey, let’s not get too picky.

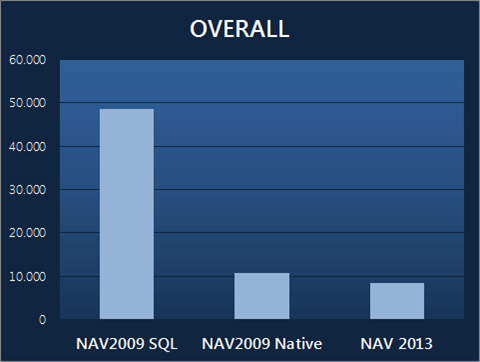

Overall Results

When you add all of the figures above together, the cumulative results demonstrate that Microsoft Dynamics NAV 2013 outperforms its previous incarnations, including the so-far unbeatable Classic Client on a native database.

On average, this is what it took the three clients to execute all tests:

| 2009 SQL |

48,636 |

| 2009 Native |

10,743 |

| 2013 |

8,374 |

And the last picture of the day:

Image 6: Overall Results

Obviously, the improvements that NAV 2013 promises are not just plain words, as these test results show. The overall performance is about 28% better than with the NAV 2009 Classic Client on a native database, and about 480% better (that’s almost 6x performance improvement!) than with NAV 2009 under SQL Server.

However, this is only a part of the story. There is another one: concurrency. Performance is always welcome, but performance is not what has been preventing NAV from scaling as much as, for example, AX could. I wonder if Microsoft will release a hardware sizing document that would estimate some kind of upper limit for the vertical scalability of NAV 2013. The last time we got such numbers from Microsoft was with version 5.0 SP1, when it was set at 250 concurrent users.

Of course, any estimates of the kind are comparing apples to oranges, anyway, because at that number of users, the application is probably always heavily customized, and the actual upper vertical scalability limit will invariably depend on a very complex set of parameters, and can be determined only on a case-by-case basis.

I would’ve loved to do concurrency tests together with the performance tests, but I may do that another time. However, based on the figures I see here, I dare estimate that, everything else being equal, concurrency levels can at least be doubled in any given NAV 2013 deployment, compared with an equal NAV 2009 deployment.

But What About The Other Test?

So, why does Per Mogensen’s test show somewhat different results? On the C/AL level, his test is very consistent with my measurements with Web services, but in Per’s tests, NAV 2013 performance with the RTC is still inferior to all other clients and platforms.

I can’t tell for sure, but I’ll give my best guess:

- Virtualization: The systems were comparable, but the tests were run under different virtual machines, and the hypervisor in charge might have redistributed resources, or other virtual machines might have been doing some cleanups while NAV 2013 was running, or a whole range of other things might have happened.

- Hardware: The RTC is a .NET application, and depends a lot on the machine’s hardware to execute all the things that .NET applications do: just-in-time compilation during warm-up, and talking to video drivers at run time. Since it was a virtual box, maybe the virtual hardware causes trouble for .NET applications talking to it, while it performs better when Win32 applications (such as the Classic client) are talking to it.

- Warm-up: While running 100 sales orders through the create-release-ship-invoice cycle should certainly be enough to warm a system up, I still think that a thorough warm-up is required for any kind of benchmarking. The warm-up time itself should be disregarded, as it is no measure of either real performance under pressure or scalability. To determine whether the system is properly warmed up, you need to keep running warm-ups until you see no significant performance difference between two consecutive runs. Only then can you start measuring. The minimum number of runs needed to determine this condition is three.
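The warm-up rule described above can be sketched as a small loop. This is a Python illustration under stated assumptions: `run_once` is a hypothetical callable returning one run’s duration in milliseconds, and the 5% tolerance and the 50-run cap are values chosen for the example, not figures from this post.

```python
def warm_up(run_once, tolerance=0.05, min_runs=3, max_runs=50):
    """Repeat `run_once` until two consecutive durations agree within tolerance.

    `run_once` is a hypothetical callable returning one run's duration in ms.
    The tolerance and the run cap are assumed values, not from the post.
    Returns the list of warm-up durations, which should then be discarded.
    """
    durations = []
    while len(durations) < max_runs:
        durations.append(run_once())
        if len(durations) >= min_runs:
            previous, latest = durations[-2], durations[-1]
            # Stop once the last two runs differ by no more than the tolerance.
            if abs(latest - previous) <= tolerance * previous:
                break
    return durations


# Simulated durations: a slow first run, then a system that settles down.
simulated = iter([9000, 2500, 1900, 1600, 1540, 1530])
print(warm_up(lambda: next(simulated)))
```

Only the runs made after this loop returns would be recorded as benchmark results.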

Don’t Take My Word For This

So, who do you trust, Per or me? Neither one! Please, don’t just take my findings for granted. Do the measurements yourself.

Here, I’ve attached the objects that I’ve used to run the benchmark, so you can run the same tests on your own machine, and see your own results. I am really curious about the results you’ll get.

So, download the objects:

The reason why there are three distinct sets of objects is that NAV 2013 uses .NET Interoperability in addition to system time to measure time, and that native doesn’t use role centers. Everything else is exactly the same.

(Just in case you need it, here is also my Excel sheet with testing results and charts.)

Run the tests, and then come back here and share your findings. I’d love to hear from you!

Vjeko,

I love to see this kind of information build on facts. Thanks!!

Great effort, Vjeko. Thanx for sharing!

Thanks for sharing !

Impressive work ! Now I’m really looking forward to get some time to play with the 2013 beta 🙂

Could you please try to create and post 100 sales headers each from 2 NSTs against the same database. I heard that NAV7 avoids locks.

Cheers, Steffen

Impressive, man! thanks!

Did you try the NAV2009 installation with the gcServer tag in the Microsoft.Dynamics.Nav.Server.exe.config?

If not, do you think the NAV2009 can experience the same performance rating with the gcServer tag?

this all is very nice, but still, how do you make UI faster?

Thanks everyone for the comments!

Alex: I tried it, and the gcServer tag doesn’t improve performance on NAV 2009. According to Microsoft it should only improve performance in concurrent scenarios, and this test was pure performance test, without any concurrency testing, that’s why it probably doesn’t help. I also realized that the tag is on by default in NAV 2013 beta.

QQ: I don’t think you can make UI much faster, but do you really think it is slow? It’s way faster than it was in NAV 2009, and obviously responsive enough when everything is cached, to yield better performance than classic.

Steffen: I tried, and there are deadlocking issues. I’ll cover this in another post.

Amazing! Thanks for the effort!

Hi Vjeko,

Love your blog/posts, but these testing methods have a few flaws in them with respect to how SQL Server caching works. Email me and I’ll explain why in case you wish to make a note about real-world performance expectations.

Wayne

Hi Wayne,

Thanks! I’ll e-mail you, but you could have also left the details in here for everybody else. I certainly wouldn’t mind 😉

I found your blog by pure luck, very interesting test you have done here, thanks for sharing…

Bravo Vjeko, this is really a great piece of work! Bookmarking this 🙂

Bravo Vjeko!

As much as I appreciate the results – there is one thing that neither Per nor you seemed to have tested: printing of document reports such as sales orders, invoices, maybe together with copies.

Whereas simple list reports are very fast (as long as you keep the RDLC dataset as small as possible), document reports cannot take any noticeable performance advantages from the current technology. Unfortunately our TAP customer discovered this, too.

This is not the fault of NAV 2013 itself but of the RDLC technology, which was not designed to create complicated document reports. It should still be considered when talking about overall benchmarks, as printing document reports is a task each customer performs many times a day.

Nice post! But there’s no comparison that shows the performance of NAV 2013 with multiple users posting simultaneously. The tests above were all carried out with a single user, would be nice to see how NAV 2013 handles concurrency.

Dipo: unfortunately, I don’t have any results here, yet. I tried something, and it didn’t yet look too good, and I didn’t have time to check whether it was my test or something else, as it gave equally poor results on NAV 2009 (and I know from practice that better results can be achieved). I’ll wait for the RTM version and then release the updated test results for the performance levels, and do some new tests for concurrency.

Thanks Babic.

Could you also make Wayne’s suggestions available here.

I’ll make sure to do that. Thanks!

Hello again Babic. Could you please mail me your email address. I have some questions on NAV 2009 I would like to ask.

Hi Vjekoslav,

In your book “Implementing Microsoft Dynamics NAV 2009” on page 101 you talk about an RTC model of departments, roles, process groups and tasks. You recommend downloading a diagram of two views, people and departments, and departments and work from

http://www.microsoft.com/dynamics/product/familiartoyourpeople.mspx

This url is no longer valid. I was not able to find the diagram. Could you help me find it?

Thank you,

Thomas

Thomas,

I’ve googled a bit and found only this on public Microsoft websites: http://ie.microsoft.com/testdrive/Graphics/MBSCustomerModel/Default.html

However, if you have access to Partnersource, try searching for “Microsoft Dynamics customer model”, or maybe be more direct and mention a couple of roles from the poster, like Sean, Viktor, Susan, Isaac… I hope this helps.

Great stuff, Vjeko!

Interesting reading as always.

Many thanks for sharing!

NAV rocks 🙂

Hi,

Can you tell me whether any server configuration changes are required to achieve this kind of performance?

I didn’t get that much performance in Navision 2013.

Anil, I used an i7 with 8gb of RAM and an SSD drive.

Good post, Vjeko!

Thanks.

This was quite a useful post, and it was quite interesting to note the various tests and results…

Hi

By any chance, have you done a similar comparison on NAV 2015? Or do you have your FOBS in TXT format?

Tomas
